With artificial intelligence and machine learning now involved in nearly every sector, there will be a great deal of discussion about how governments manage the implications and uses of these emerging technologies. Government agencies around the globe have begun looking at the regulations needed for both agencies and industries to implement the technologies properly. These regulations could address the ethics of such use, liability for automated decisions, impacts on jobs, and much more. This is an important step in the life cycle of artificial intelligence as it moves from development to implementation.

Military and EU AI Act

The United States government has proposed a set of guidelines for the military to follow in its use of AI and automated systems, as covered by Matthew Gooding in an article for Tech Monitor. The declaration was released during a summit on the topic in the Netherlands. It is an attempt to encourage US allies in the EU to follow along and set standards so that these technologies are used in the defense space in a responsible manner.

The Department of Defense allocated $874 million to artificial intelligence technologies in its 2022 budget. An investment of this size in such a new field raises many questions about ethics, particularly since other countries will be developing similar technologies as well. That is the motivation for the declaration, which comes as the EU works to pass its much-debated AI Act. This act would regulate the use of automated systems across Europe.

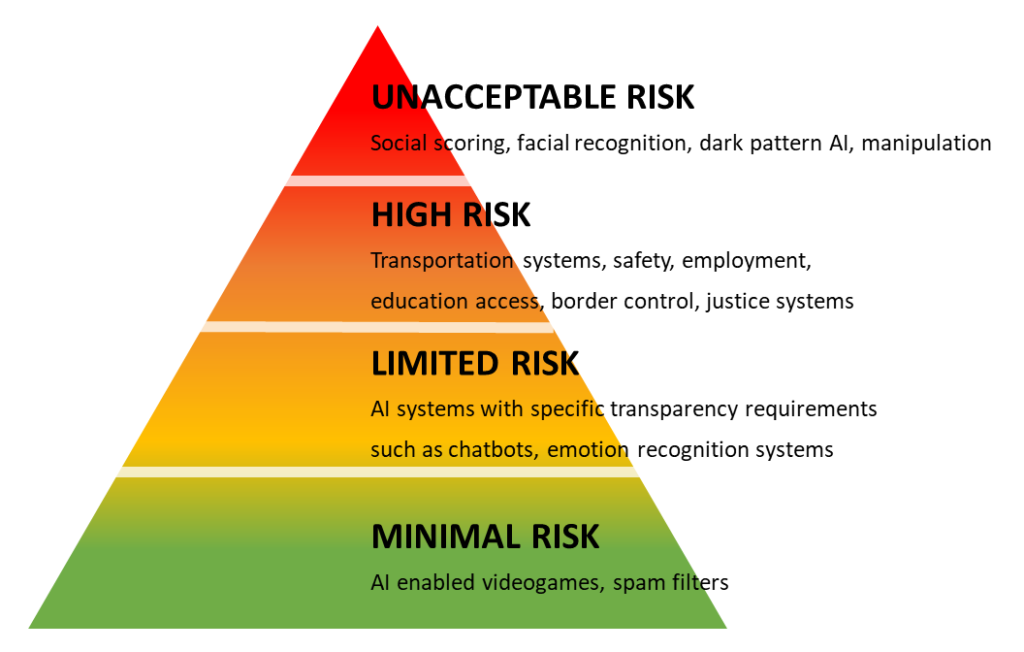

This act would be the first law on AI by a major regulator anywhere in the world, according to the EU itself. The law would assign AI applications to three risk categories, under which an application would be banned, highly regulated, or not regulated at all. This approach has its difficulties, chiefly in defining the point at which an AI application becomes “high risk”. ChatGPT has come up in the debate because of its wide use and popularity, and according to the EU commissioner, the Act would include provisions for ChatGPT and other generative AI so that they remain open to the public while being controlled in certain use cases. Generative AI can write hate speech or fake news, which could be problematic for many reasons, so this aspect would need to be examined extensively before the law is passed. There are also many loopholes that would allow the “low risk” category to be exploited, as applications in it would not be under any sort of governance. The AI Act is projected to pass within the coming months, once many specific details are ironed out.

New York AI Governance Efforts

At a more local level, the New York State Comptroller’s office audited the development and use of AI tools and systems in New York City over the past three years. The audit examined the city’s Office of Technology and Innovation, with the goal of establishing a proper governance structure over the continued development and use of AI. New York City uses AI in several agencies, including the NYPD, which uses AI-powered facial recognition, and the Administration for Children’s Services, which uses AI to predict which children face future harm in order to prioritize cases.

The audit found that NYC does not have an adequate AI governance framework in place. Existing laws only require agencies to report their use of AI and do not regulate that use. The government would like central regulation and guidance on AI use in order to ensure “that the City’s use of AI is transparent, accurate, and unbiased and avoids disparate impacts.” The audit was done to prevent the issues and potential liabilities that could arise from irresponsible use of AI, and it sets an example for other governments to follow.

Potential Unemployment and Tax Methods

Another big hurdle in the political space is automation’s threat to jobs. US politicians are starting to propose ways to deal with the unemployment that could result from the widespread implementation of AI and automation. Bernie Sanders is one of those politicians, and he discusses the issue in a recently released book. As covered by Tristan Bove of Fortune, Sanders raises the idea of taxing companies that replace current workers with automation on a large scale. Bill Gates has also been a part of the discussion about taxing corporations that make these changes. “If workers are going to be replaced by robots, as will be the case in many industries, we’re going to need to adapt tax and regulatory policies to assure that the change does not simply become an excuse for race-to-the-bottom profiteering by multinational corporations,” Sanders writes in his book, It’s OK to Be Angry About Capitalism.

President Biden’s administration sees the issue more in terms of replacing factory jobs with jobs from the fast-growing tech industry. That shift in skills would take many years, however, and large-scale job cuts would still create an unemployment problem in the meantime. A middle ground would have to be found between fully automating tasks and keeping human workers involved in the process. A tax may not deter companies from automating, since the savings from much lower labor expenses could still leave them better off. There are many ways to look at this scenario, but we will have to wait and see whether these projections come true. The idea of implementation so widespread that it causes an unemployment crisis is still only speculation, a forecast about the technology and the industries it affects.

Sources