When I first heard that artificial intelligence can be used to create music, I was instantly worried about musicians and their careers. Music is not a recession-proof industry, so economic struggles of any severity are particularly worrisome for people who make their living from it. Looking back at the history of the music industry, however, it is interesting to see how musicians always seem to prevail. For example, when radio was first introduced, musicians worried that they would lose a major revenue stream because people would no longer need to see them live to hear their music. When Napster launched in 1999, musicians were once again terrified that their careers were doomed because listeners could simply get their music for free over the internet. There are a few other historical instances of musicians’ jobs being threatened, but as these examples show, the music industry remains alive despite a series of alarming technological disruptions.

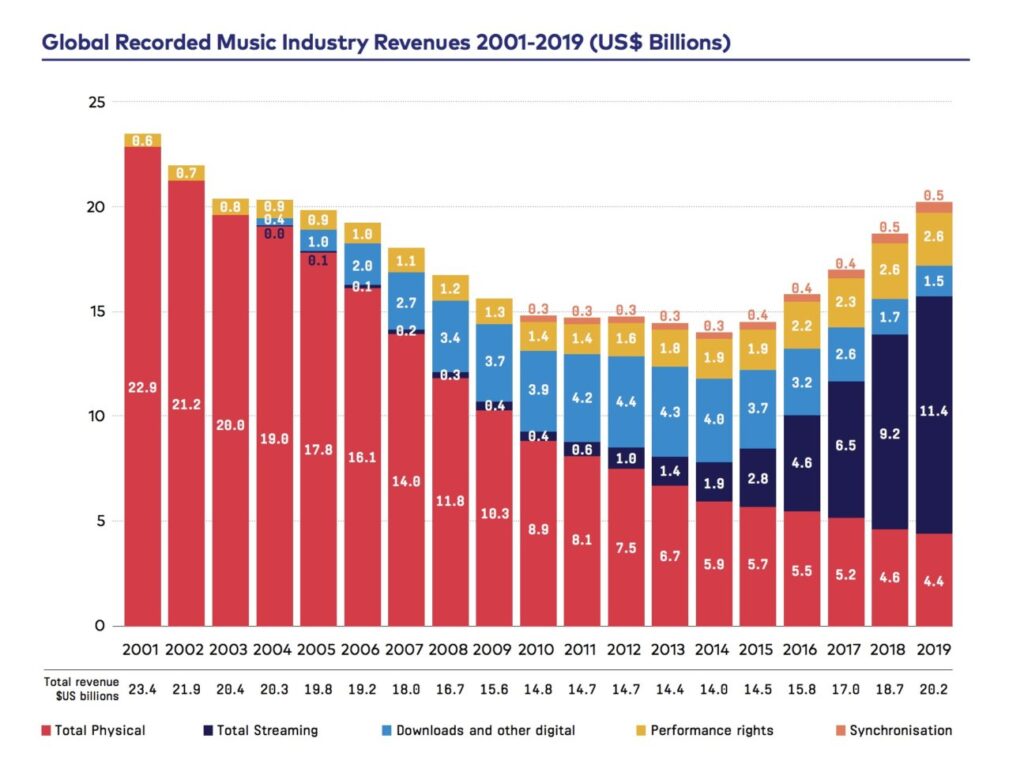

Even when the industry hit a trough in 2014 as a direct result of the digitization of music consumption, it bounced back quickly once sites like Grooveshark were shut down (RIP) and consumers had a range of digital service providers (DSPs) to choose from. Apple Music, Spotify, Tidal, and other platforms revitalized the music industry’s ability to bring in revenue, and musicians dodged another bullet. Streaming services still pay artists very little for each individual stream, and many artists are working to change this. Low streaming royalties remain a problem as of 2023, but musicians may need to turn their attention to another imminent threat: artificial intelligence’s ability to make music. Most of us have already heard AI voice replication. It is surprisingly accurate and has tricked me on multiple occasions. Voice replication can be used in conjunction with a tool like ChatGPT to create an entirely new a cappella with original lyrics. Furthermore, AI music generators can create the instrumental itself. When these three elements (a replicated voice, generated lyrics, and generated music) are joined together, a song is created. Anyone, including those who know nothing about music theory, can do this, so should musicians be worried?

Art is something people make and present to other people to try to elicit an emotional response. At its most basic level, music consists of sound waves, and sound is produced when an object vibrates. Our ability as humans to tame the chaos that we call noise and turn it into the orderly arrangement that is music has always fascinated me, and now artificial intelligence can do it too. An example of this complicated process should help anyone who is confused gain a better understanding of how it works.

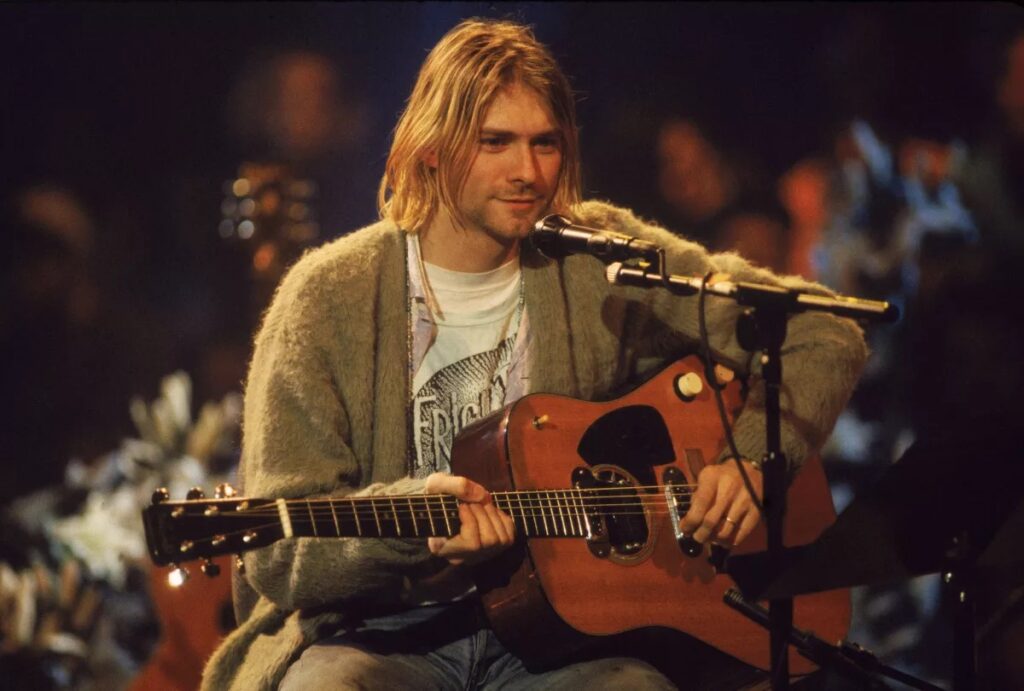

In 2021, Sean O’Connor used Magenta, a Google AI program, to create a song in the style of Nirvana. The program analyzes a set of works and can then compose music in the artist’s style. After examining the intricacies, patterns, chord changes, drum sounds, and lyrics of around 30 songs, the AI program has a basic understanding of how an artist would create a song. For the Nirvana project, Magenta read the songs as MIDI files, which are translations of a song’s notes and rhythm into digital code that can be put through a synthesizer to play the song (Rolling Stone). Because the computer struggles with reading entire songs, the 20-30 source songs first had to be separated into their solos, hooks, and verses. The computer then read every detail of each part and generated new music, from which people selected the best passages. Those selections were joined together, and audio effects were added to finish the production process.
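To make the idea of a song as "digital code" a little more concrete, here is a minimal Python sketch of how notes and rhythm can be stored as numbers. It is not Magenta's code or the project's actual pipeline. The `Note` class, the helper functions, and the four-note `riff` are all made up for illustration, and the riff is not taken from any Nirvana song. The one standard piece is the conversion from a MIDI note number to a frequency, which assumes the usual tuning where note 69 (the A above middle C) is 440 Hz.

```python
from dataclasses import dataclass

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


@dataclass
class Note:
    """One MIDI-style note event (hypothetical structure for this sketch)."""
    pitch: int       # MIDI note number, 0-127 (60 is middle C)
    start: float     # when the note begins, in beats
    duration: float  # how long the note lasts, in beats


def midi_to_hz(pitch: int) -> float:
    """Convert a MIDI note number to a frequency, assuming A4 (69) = 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)


def note_name(pitch: int) -> str:
    """Name a MIDI note number, using the convention that 60 is C4."""
    return f"{NOTE_NAMES[pitch % 12]}{pitch // 12 - 1}"


def describe(notes, bpm=120.0):
    """Return (name, frequency in Hz, start in seconds, length in seconds) per note."""
    seconds_per_beat = 60.0 / bpm
    return [
        (
            note_name(n.pitch),
            round(midi_to_hz(n.pitch), 2),
            n.start * seconds_per_beat,
            n.duration * seconds_per_beat,
        )
        for n in notes
    ]


# A made-up four-note phrase: pitch, start beat, length in beats.
riff = [Note(64, 0, 1), Note(67, 1, 0.5), Note(69, 1.5, 0.5), Note(64, 2, 2)]

for row in describe(riff):
    print(row)
```

A synthesizer does roughly the reverse of `describe`: it takes each row of numbers and produces a sound at that frequency for that length of time. Because the song is just a list of numbers at this point, a program can look for patterns across many songs, which is the part Magenta handled in the Nirvana project.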

To create the lyrics, an artificial neural network scanned many of Kurt Cobain’s songs and output new lyrics in his style. It took multiple pages of generated lyrics to find phrases that matched the Magenta-produced vocal melodies. The finished product is here: “Drowned in the Sun” (impressive, right?). After reading about this complex process, I am surprised by the amount of human intervention required to create a song made by artificial intelligence. The computer can create music in the style of an artist, but most of it is awful, and it takes a human ear to discern what is listenable.

My main takeaway after reading about how artificial intelligence creates music is that it is still dependent on humans. Just as we cannot predict a hit song, artificial intelligence cannot create good songs on demand. When creating a song with Nirvana’s sound, the computer was easily able to produce plenty of material. However, it took people hours of listening to select the best parts. From there, a person had to mix and master the song to finalize everything.

Giving artificial intelligence a clear framework of what to create works somewhat well, but what if it were given free rein to create whatever it wanted? Would an entirely new genre of music be introduced if we let it produce music on its own? These are questions we might have answers to over the next few years, and for now, I think musicians are safe. There is no real ethical concern for musicians who use artificial intelligence, and those who do are using it as a co-creator (Science Focus). It is easier than ever to create music given our current state of technology, and there are successful modern artists, such as Steve Lacy, who have produced hits using only their smartphones (Wired). Artificial intelligence might enable more people to make music, and I see this as a good thing. It would also be fascinating to see what artificial intelligence could create if it were prompted to blend multiple genres together. All things considered, musicians should not be worried about artificial intelligence taking their jobs.