This blog post topic might be a stretch, but it is not totally irrelevant, and it is something I think we should strongly consider. With advances in AI, it has become easier than ever to communicate with robots about personal issues and emotions. This could result in humans turning to AI in times of loneliness. According to a global survey, 33% of all adults experience some sort of loneliness in their lifetime. For this reason, there has been an increase in AI chatbots built for simple conversation. So what exactly makes it easier to talk to a computer than to another person about your problems and worries?

Someone experiencing depression or anxiety would typically seek help from a medical professional, but this can often be very expensive. Cost is the first reason people might turn to AI rather than go to a therapist. Because AI is basically free to anyone with internet access, people can talk to a chatbot about the events going on in their life and seek relief. The second reason someone would turn to AI rather than an actual person is availability. AI chatbots are accessible at every hour of the day, and they can listen and give the responses someone might need in a time of desperation. Finally, the third reason someone might go to AI is to have a conversation free of bias. Humans are innately biased, whether we mean to be or not. A person would not face this problem with a computer, which makes the response seem less judgmental.

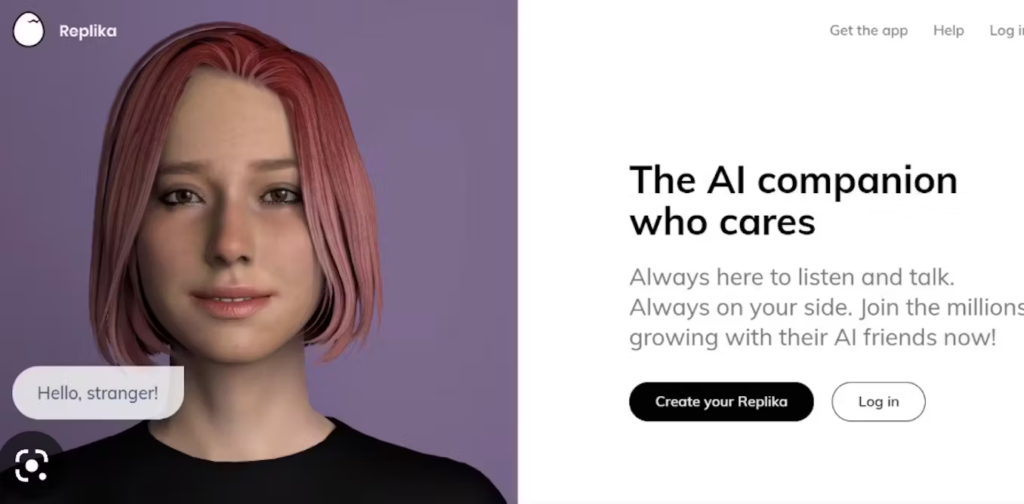

We have already seen many AI chatbots and discussed them in class. However, one specific chatbot stands out when it comes to empathic conversations: Replika. This is the #1 AI chatbot to talk to if you want answers given with empathy. During the pandemic in 2020, many people's mental health was at an all-time low, and Replika noticed a spike in its use during this time. People were turning to Replika for meaningful conversations because of the lack of human interaction during lockdown.

Although AI chatbots may seem like a good thing that will help people in times of loneliness, it seems they may actually leave people worse off. A study by ReadWrite says that “Humans need other humans to interact with and communicate. Talking to robots and lack of human interaction increases depression, anxiety, and other physiological issues.” Humans need other humans, and using AI as a shortcut does not seem to be the answer. However, I do not see the use of empathic chatbots slowing down in the future. The more accessible they become, the more users they will gain. This is the age of online dating, and of meeting and talking to people online. Will we see a time when people engage in intimate relationships with computers? I’m very curious to see what the future holds for the connection between humans and robots. Thank you for reading, and please share your thoughts on whether the continuation and advancement of empathic chatbots is a good idea for society.